A Primer on Programming–The Basics, History, Design & Components for Non-Technical Business Executives

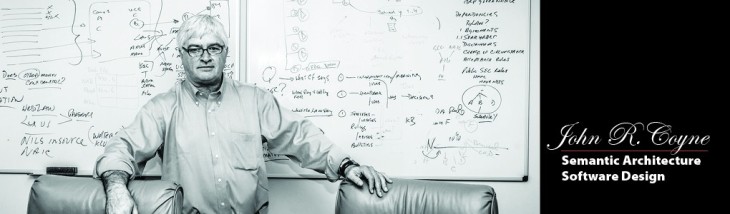

By John R. Coyne, Semantic Computing Consultant

In traditional programming and the Systems Development Lifecycle, information is gathered from users to describe their needs. That information is translated into a systems analysis, confirmed, and then codified, producing a System Design.

Then, an architecture or framework to support the system is created.

This will include:

- Infrastructure

- Software

- Choice of programming language

- Operating system

- Data elements, which are called from time to time and potentially modified

This architecture then supports the system design and all its components.

Programmers perform two fundamental functions:

- They express the users' needs in terms of statements of computer functions.

- They embed in those computer functions the methods that the computer will need to perform in order to execute them. These are:

  - descriptions of data to be used

  - networks to traverse

  - security protocols to use

  - infrastructure for processing

(Summarized at the most abstract level, these could be described as Transport, Processing and Memory.)

This intricate association of descriptions of 1) what the system should do, and 2) how it will do it relies on the programmer and system designer to perform their tasks with precision.

In many cases both will rely on third-party software, the most common of which is a proprietary database.

These proprietary databases come with tools that make their use more convenient. (That is because these databases are complex and, without the tools, the systems designers would have to have intimate knowledge of how the internals of the database systems work.)

Thus, the abstraction allows the systems builder to concentrate on what the user wants, versus what the database system needs to perform its functions.

In the early days of computing, programmers had to specify the data they needed, test the data, and merge or link other data types themselves. Now, databases come with query languages such as SQL that allow programmers to simply ask for the data they want. The database system does the rest.
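To make the idea of "simply asking" concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` table, its columns, and the sample names are hypothetical, invented purely for illustration. Note that the SQL statement says only what data is wanted; it says nothing about how the database should locate or sort it.

```python
import sqlite3

# Hypothetical sample data, invented for this illustration.
SAMPLE_CUSTOMERS = [
    ("Carol", "Boston"),
    ("Bob", "Chicago"),
    ("Alice", "Boston"),
]


def customers_in_city(city):
    """Return the names of customers in `city`, in alphabetical order."""
    conn = sqlite3.connect(":memory:")  # a throwaway in-memory database
    conn.execute("CREATE TABLE customers (name TEXT, city TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", SAMPLE_CUSTOMERS)

    # The query states WHAT is wanted. HOW the rows are found and
    # sorted is left entirely to the database system.
    rows = conn.execute(
        "SELECT name FROM customers WHERE city = ? ORDER BY name",
        (city,),
    ).fetchall()
    conn.close()
    return [name for (name,) in rows]


if __name__ == "__main__":
    print(customers_in_city("Boston"))
```

The programmer never writes a search loop or a sorting routine here; those details stay hidden behind the database's abstraction.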

Programs written in programming languages are also abstractions.

How Computer Programming Developed

In the early days of programming, programs were written in machine language, which was an arcane art blending both engineering and systems knowledge. Later, assembler languages were developed as a first level of abstraction. These were known as second-generation languages. Even these languages required specialized skills. The next leap was with third-generation languages, the most common of which was COBOL (short for Common Business-Oriented Language), which was developed so that people without engineering skills could program a computer.

With machine languages, there was no translation step to help the computer system understand what the programmer wanted it to do. (Assembler languages required some translation, but they are so similar to machine language that little translation is needed.)

In third-generation languages, the concept of a “compiler” was created. The compiler takes a computer language that is easy to program in and translates it to a language the computer can use for processing the requirements. During this generation of programming, many third-party tools were developed to aid systems designers in the delivery of their systems and thus a whole industry was born.
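The translation step a compiler performs can be glimpsed with a small sketch in Python. This is an analogy under stated assumptions, not a picture of a COBOL compiler: Python's built-in `compile` translates source text into bytecode for Python's own virtual machine rather than into native machine instructions. The expression and the names `price` and `quantity` are hypothetical.

```python
import dis

# A human-readable expression, standing in for "a language that is easy
# to program in". The variable names are hypothetical.
SOURCE = "price * quantity"

# compile() translates the text into a code object holding low-level
# bytecode instructions for Python's virtual machine.
CODE = compile(SOURCE, "<example>", "eval")


def run(price, quantity):
    """Execute the translated code with concrete values."""
    return eval(CODE, {"price": price, "quantity": quantity})


if __name__ == "__main__":
    dis.dis(CODE)      # show the low-level instructions that were produced
    print(run(3, 4))   # run the translated form
```

The listing printed by `dis.dis` differs between Python versions, but the point is the same in each: the readable form is for people, and the translated form is for the machine.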

Not surprisingly, computers became more complex and, over time, so did the systems that people wanted designed. This complexity drove systems to become almost impossible to understand in their entirety. Eventually, instead of changing them, systems designers simply appended new programs to the older systems and created what is sometimes termed “spaghetti code.”

Eventually, something had to change. Now, after years of research based on artificial intelligence techniques, new tools have emerged that enable a new generation of programming, one in which the computer determines the best resources it needs to do what is requested of it. The science in this is not important. What IS important is that now, the original process of determining what the user wants can be separated from how it gets done.

In semantic modeling, no programming takes place. Rather, a modeler interviews subject matter experts to determine what they want to happen, the best way for it to happen and the best expected results.

Semantic modeling is constructed much like an English sentence (which is one reason for the term “semantic”). There is a subject, a predicate (or relationship), and an object of the sentence. Like the building of a story or report, these “sentences” are connected to one another to create a system. Also like creating a report, “sentences” may be used over and over again to reduce the amount of repetitive work. In semantic modeling, these “sentence structures” are called concepts. Concepts are the highest level of abstraction in the program’s “story.”

Like a sentence, the requirements of the system can be structured in near-English, grammar-level terms.

For instance:

“A Passport (subject) requires (predicate) citizenship (object).”

(The concept that we are dealing with could be “international travel.” This demonstrates the linkages between coding “sentences.”)

“International travel – requires – a passport.” and thus, as has been seen, “A passport – requires – citizenship.”

To expound on our grammatical analogy for programming the system, the same terms delineating a “passport” could be used for “checking into a hotel”:

“Hotel – requires – proof of identity.”

(Identity as a concept can re-use the “passport” sentence.)

“Passport – is a form of – identity.”

(Thus, the speed of development is greatly improved because of the re-usability.)
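The “sentences” above can be written down as plain data. The sketch below is one hypothetical way to do so in Python and is not the notation of any particular semantic modeling product. The `Statement` type and the `statements_about` helper are invented for illustration, and the terms have been lowercased for consistency.

```python
from collections import namedtuple

# One "sentence": a subject, a predicate (relationship), and an object.
Statement = namedtuple("Statement", ["subject", "predicate", "object"])

# The example sentences from the text, recorded as data rather than as
# program logic.
MODEL = [
    Statement("international travel", "requires", "passport"),
    Statement("passport", "requires", "citizenship"),
    Statement("hotel", "requires", "proof of identity"),
    Statement("passport", "is a form of", "identity"),
]


def statements_about(term, model=MODEL):
    """Return every statement that mentions `term` as subject or object."""
    return [s for s in model if term in (s.subject, s.object)]


if __name__ == "__main__":
    # "passport" is defined once but appears in several sentences.
    for s in statements_about("passport"):
        print(f"{s.subject} - {s.predicate} - {s.object}")
```

Because “passport” is a single shared term, the travel sentences and the identity sentence all refer to the same thing, which is the re-use the text describes.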

Also like a sentence, the terms can be represented graphically as a hierarchy, much like the sentence deconstruction (diagramming) we learned in high school.

Notice that the terms do not describe how such information is to be found, what order of precedence they have, or how the system is to process such statements. In keeping with this “separation of concerns,” the new semantic systems use another mechanism, known as an inference engine, to process the data.

The inference engine is a logic tool that determines what is needed to accomplish the semantic concepts. The goal of the inference engine is to solve the computing requirements.
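As a rough illustration of what such an engine does, the toy sketch below follows “requires” statements from one term to the next until nothing new turns up. It is a deliberately simplified assumption of how inference might work. Real inference engines support far richer rules, and the `infer_requirements` function and the `FACTS` data are hypothetical.

```python
# Statements supplied by the modeler, taken from the passport example.
FACTS = {
    ("international travel", "requires", "passport"),
    ("passport", "requires", "citizenship"),
}


def infer_requirements(goal, facts):
    """Return everything `goal` requires, directly or indirectly."""
    found = set()
    pending = [goal]  # terms whose requirements still need to be looked up
    while pending:
        current = pending.pop()
        for subject, predicate, obj in facts:
            if subject == current and predicate == "requires" and obj not in found:
                found.add(obj)       # a newly derived requirement
                pending.append(obj)  # it may have requirements of its own
    return found


if __name__ == "__main__":
    print(sorted(infer_requirements("international travel", FACTS)))
```

Nobody stated that international travel requires citizenship. The engine derived it by chaining two statements together, and the modeler never had to say in what order to process them.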

Of course, like databases, semantic systems come with tools that allow the business user and modeler to describe what the system should be doing, without the need for intimate knowledge of expert systems or artificial intelligence techniques. They simply model. As with the aforementioned SQL statement, the computer takes care of working out how to satisfy the system requirements.

Underneath all this is the usual figurative plumbing found in computer programming. There are networks to be traversed, data to be called and transformed, reports to write, and computers to process the requests. Today, all of these are simply well-understood services. They are supported by a whole industry of third-party suppliers with proprietary products, by an even greater universe of engineers supporting open standards, and even by free software available to do these tasks.